Nearly forgot again – it only seems a couple of days since I wrote last week’s notes. Oh right, it is. I’m also mildly surprised that I seem to have been correctly incrementing the week number every time.

A bit of routine medical (or medical-adjacent) stuff this week. On Wednesday I gave blood for the first time in a year, after being suspended for not maintaining proper haemoglobin levels. All was well this time, though, so I got to earn my ginger nuts. Then I cycled into the office (well, to be pedantic, I stopped at the bike shed and walked the rest of the way), mainly to take advantage of a walk-in Covid booster session. This seemed convenient on the face of it, but it actually involved taking a ticket and waiting the best part of an hour for my turn, unlike my previous boosters at a pharmacy, where I was in and out in ten minutes. Anyway, I’m now bivalently boosted, so I should be better defended against Omicron than before.

Thursday was my check-up at the dentist, and I actually managed to turn up on the right day this time. All teeth present and correct (well, apart from the ones that were already missing).

We had our first club track session of the year on Friday evening, which featured blustery winds and rain. Lovely. Just before I left the house for that, another massive branch ripped itself off the apple tree, covering another large chunk of lawn (well, “lawn” is pushing it a bit, given I haven’t mowed it for years). That wasn’t the branch I’d expected to go next, so on Sunday I went up a ladder and trimmed a large amount off the one I’d been worried about, so that if it did decide to join its colleagues on their groundward journey it wouldn’t take the hedge with it. A friend has offered to help chop up the wood and take it away for firewood, but in the meantime the bits I sawed off have taken over another section of lawn.

I think this technically belongs with last week’s notes, but I got some more badger footage from the infrared camera (this one is it eating a crust of bread I left out):

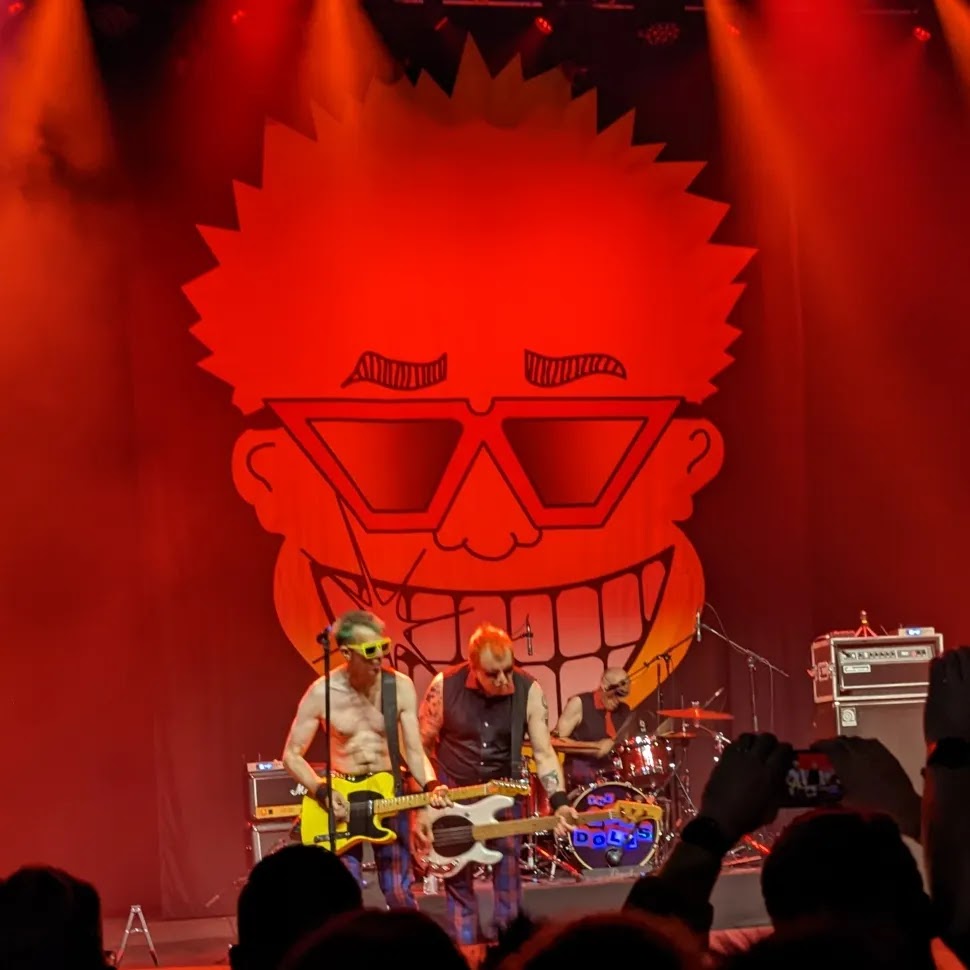

On Saturday I drove to Norwich for a much-delayed Frank Turner show at the UEA. It’s the first time I’ve seen him since he cut short his 2020 show the day before I was due to go (and a couple of days before the UK lockdown kicked in). He was actually supposed to be coming to Ipswich in 2021 for the first time in forever, but that got cancelled too in one of the later Covid waves. It was worth the wait, though, despite the occasional shooting pain down my arm when people crashed into me and triggered whatever almost-trapped-nerve thing has been lurking in my right shoulder for years.